Learning an Image Classification Model from Scratch

Introduction

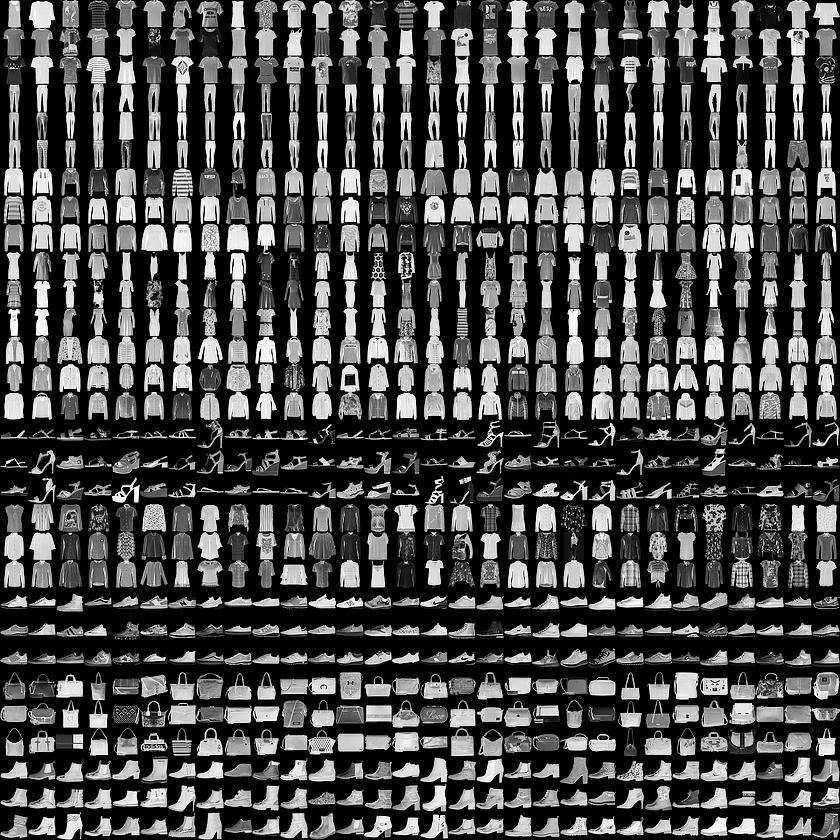

The fashion_mnist dataset consists of 70,000 images of clothing items across 10 categories.

Luckily for us, this dataset is available in a convenient format through Keras, so we will load it and take a look.

But first, let's get the usual technical preliminaries out of the way.

As we did previously, we will first import the following packages and set the seed for the random number generator.

import tensorflow as tf
from tensorflow import keras
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
# initialize the seeds of the Python, NumPy and TensorFlow random number
# generators so that the results are (as far as possible) the same every
# time the notebook is run
keras.utils.set_random_seed(42)

With the technical preliminaries out of the way, let's load the dataset and take a look.

# load data into x_train, y_train, x_test, y_test
(x_train, y_train), (x_test, y_test) = keras.datasets.fashion_mnist.load_data()


print(x_train.shape, y_train.shape)

(60000, 28, 28) (60000,)

There are 60,000 images in the training set, each of which is a 28x28 matrix.

print(x_test.shape, y_test.shape)

(10000, 28, 28) (10000,)

The remaining 10,000 images are in the test set.

OK, let's look at the first 10 values of the dependent variable y (the labels).

y_train[:10]

array([9, 0, 0, 3, 0, 2, 7, 2, 5, 5], dtype=uint8)

What do these numbers mean?

According to the fashion_mnist GitHub site, each label from 0 to 9 corresponds to the following category:

| Label | Description |
|---|---|
| 0 | T-shirt/top |
| 1 | Trouser |
| 2 | Pullover |
| 3 | Dress |
| 4 | Coat |
| 5 | Sandal |
| 6 | Shirt |
| 7 | Sneaker |
| 8 | Bag |
| 9 | Ankle boot |

Let's create a small Python list so that we can easily go from numbers to descriptions.

# labels[i] is the description of class i
labels = [
    "T-shirt/top",
    "Trouser",
    "Pullover",
    "Dress",
    "Coat",
    "Sandal",
    "Shirt",
    "Sneaker",
    "Bag",
    "Ankle boot",
]

Given a number, the description is now a simple look-up. Let's see what the very first training example is about.

labels[y_train[0]]

'Ankle boot'

The very first image is an "Ankle boot"!
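Because labels is an ordinary Python list, the same lookup also works for several examples at once. The sketch below hard-codes the ten label values printed earlier for y_train[:10] so that it runs on its own. In the notebook you would iterate over y_train[:10] directly.

```python
import numpy as np

labels = [
    "T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot",
]

# The first ten training labels, copied from the y_train[:10] output above
first_ten = np.array([9, 0, 0, 3, 0, 2, 7, 2, 5, 5], dtype=np.uint8)

# Map each integer label to its text description
names = [labels[i] for i in first_ten]
print(names)
# ['Ankle boot', 'T-shirt/top', 'T-shirt/top', 'Dress', 'T-shirt/top',
#  'Pullover', 'Sneaker', 'Pullover', 'Sandal', 'Sandal']
```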

Let's take a look at the raw data for the image.

x_train[0]

array([[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
       [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
       [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
       [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 13, 73, 0, 0, 1, 4, 0, 0, 0, 0, 1, 1, 0],
       [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 3, 0, 36, 136, 127, 62, 54, 0, 0, 0, 1, 3, 4, 0, 0, 3],
       [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 6, 0, 102, 204, 176, 134, 144, 123, 23, 0, 0, 0, 0, 12, 10, 0],
       [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 155, 236, 207, 178, 107, 156, 161, 109, 64, 23, 77, 130, 72, 15],
       [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 69, 207, 223, 218, 216, 216, 163, 127, 121, 122, 146, 141, 88, 172, 66],
       [0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 0, 200, 232, 232, 233, 229, 223, 223, 215, 213, 164, 127, 123, 196, 229, 0],
       [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 183, 225, 216, 223, 228, 235, 227, 224, 222, 224, 221, 223, 245, 173, 0],
       [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 193, 228, 218, 213, 198, 180, 212, 210, 211, 213, 223, 220, 243, 202, 0],
       [0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 3, 0, 12, 219, 220, 212, 218, 192, 169, 227, 208, 218, 224, 212, 226, 197, 209, 52],
       [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 6, 0, 99, 244, 222, 220, 218, 203, 198, 221, 215, 213, 222, 220, 245, 119, 167, 56],
       [0, 0, 0, 0, 0, 0, 0, 0, 0, 4, 0, 0, 55, 236, 228, 230, 228, 240, 232, 213, 218, 223, 234, 217, 217, 209, 92, 0],
       [0, 0, 1, 4, 6, 7, 2, 0, 0, 0, 0, 0, 237, 226, 217, 223, 222, 219, 222, 221, 216, 223, 229, 215, 218, 255, 77, 0],
       [0, 3, 0, 0, 0, 0, 0, 0, 0, 62, 145, 204, 228, 207, 213, 221, 218, 208, 211, 218, 224, 223, 219, 215, 224, 244, 159, 0],
       [0, 0, 0, 0, 18, 44, 82, 107, 189, 228, 220, 222, 217, 226, 200, 205, 211, 230, 224, 234, 176, 188, 250, 248, 233, 238, 215, 0],
       [0, 57, 187, 208, 224, 221, 224, 208, 204, 214, 208, 209, 200, 159, 245, 193, 206, 223, 255, 255, 221, 234, 221, 211, 220, 232, 246, 0],
       [3, 202, 228, 224, 221, 211, 211, 214, 205, 205, 205, 220, 240, 80, 150, 255, 229, 221, 188, 154, 191, 210, 204, 209, 222, 228, 225, 0],
       [98, 233, 198, 210, 222, 229, 229, 234, 249, 220, 194, 215, 217, 241, 65, 73, 106, 117, 168, 219, 221, 215, 217, 223, 223, 224, 229, 29],
       [75, 204, 212, 204, 193, 205, 211, 225, 216, 185, 197, 206, 198, 213, 240, 195, 227, 245, 239, 223, 218, 212, 209, 222, 220, 221, 230, 67],
       [48, 203, 183, 194, 213, 197, 185, 190, 194, 192, 202, 214, 219, 221, 220, 236, 225, 216, 199, 206, 186, 181, 177, 172, 181, 205, 206, 115],
       [0, 122, 219, 193, 179, 171, 183, 196, 204, 210, 213, 207, 211, 210, 200, 196, 194, 191, 195, 191, 198, 192, 176, 156, 167, 177, 210, 92],
       [0, 0, 74, 189, 212, 191, 175, 172, 175, 181, 185, 188, 189, 188, 193, 198, 204, 209, 210, 210, 211, 188, 188, 194, 192, 216, 170, 0],
       [2, 0, 0, 0, 66, 200, 222, 237, 239, 242, 246, 243, 244, 221, 220, 193, 191, 179, 182, 182, 181, 176, 166, 168, 99, 58, 0, 0],
       [0, 0, 0, 0, 0, 0, 0, 40, 61, 44, 72, 41, 35, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
       [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
       [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]], dtype=uint8)

Let's look at the first 25 images using the handy plt.imshow() command.

# create a figure with a 5 x 5 grid of subplots, one per image
fig = plt.figure(figsize=(30, 10))
for i in range(25):
    ax = fig.add_subplot(5, 5, i + 1, xticks=[], yticks=[])
    ax.set_title(labels[y_train[i]])
    ax.imshow(x_train[i], cmap="gray")
plt.show()

The images are a bit small but they will do for now.

A NN Model - First Attempt

Our first NN will be a simple one with a single hidden layer.

Data Prep

Tip: NNs tend to train best when each independent variable lies in a small range. So, rescale the inputs by either

- subtracting the mean and dividing by the standard deviation (standardization), or

- dividing by the maximum value, if the values are guaranteed to lie in a known range.

The inputs here range from 0 to 255. Let's normalize to the 0-1 range by dividing everything by 255.

# scale pixel values from the 0-255 range down to the 0-1 range
x_train = x_train / 255.0
x_test = x_test / 255.0
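The tip above also mentions a second option, standardization. The sketch below shows both options side by side. It uses small synthetic uint8 arrays as stand-ins for x_train and x_test, which is an assumption made so the code runs without the real data. With standardization, the mean and standard deviation are computed on the training set only and then reused for the test set.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic stand-ins for x_train / x_test: uint8 pixels in 0..255
fake_train = rng.integers(0, 256, size=(100, 28, 28)).astype(np.uint8)
fake_test = rng.integers(0, 256, size=(20, 28, 28)).astype(np.uint8)

# Option 1: divide by the known maximum (what the notebook does)
train_scaled = fake_train / 255.0
test_scaled = fake_test / 255.0

# Option 2: standardize using statistics from the training set only
mean, std = fake_train.mean(), fake_train.std()
train_std = (fake_train - mean) / std
test_std = (fake_test - mean) / std   # reuse the training mean/std

print(train_scaled.min(), train_scaled.max())   # both within [0, 1]
print(train_std.mean(), train_std.std())        # approximately 0 and 1
```

Dividing by 255 is the simpler choice here because pixel values are guaranteed to lie between 0 and 255.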

Define Model in Keras

As we saw in the previous module, creating an NN is usually just a few lines of Keras code.

- The input will be 28 x 28 matrices of numbers. These will have to be flattened into a long vector and then fed to the hidden layer.

- We will start with a single hidden layer of 256 ReLU neurons.

- Since this is a multi-class classification problem (i.e., we need to predict one of 10 clothing categories), the output layer has to produce a 10-element vector of probabilities that sum to 1.0. So we will use the softmax activation that we learned about in the previous lecture.
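To see what these three pieces compute, here is a NumPy sketch of a single forward pass through the same architecture: flatten, a 256-unit ReLU layer, and a 10-unit softmax layer. The weights are random and untrained. Small normal values are an assumption made for simplicity, since Keras Dense layers actually default to Glorot-uniform initialization. The random image is also a stand-in.

```python
import numpy as np

rng = np.random.default_rng(42)

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    # subtracting the max improves numerical stability without changing the result
    e = np.exp(z - z.max())
    return e / e.sum()

image = rng.random((28, 28))          # stand-in for one normalized image
x = image.reshape(-1)                 # Flatten: 28 x 28 -> 784

# Random, untrained weights with the same shapes as the Keras layers
W1, b1 = rng.normal(0, 0.05, (784, 256)), np.zeros(256)
W2, b2 = rng.normal(0, 0.05, (256, 10)), np.zeros(10)

h = relu(x @ W1 + b1)                 # hidden layer: 256 ReLU units
probs = softmax(h @ W2 + b2)          # output layer: 10 probabilities

print(x.shape, h.shape, probs.shape)  # (784,) (256,) (10,)
print(probs.sum())                    # 1.0, up to floating-point rounding
```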

# define the input layer
inputs = keras.Input(shape=(28, 28))
# convert the 28 x 28 matrix of numbers into a long vector of 784 numbers
h = keras.layers.Flatten()(inputs)
# feed the long vector to the hidden layer
h = keras.layers.Dense(256, activation="relu", name="Hidden")(h)
# feed the output of the hidden layer to the output layer
output = keras.layers.Dense(10, activation="softmax", name="Output")(h)
# tell Keras that this (input, output) pair is your model
model = keras.Model(inputs, output)
model.summary()

Model: "functional"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| input_layer (InputLayer) | (None, 28, 28) | 0 |
| flatten (Flatten) | (None, 784) | 0 |
| Hidden (Dense) | (None, 256) | 200,960 |
| Output (Dense) | (None, 10) | 2,570 |

Total params: 203,530 (795.04 KB)

Trainable params: 203,530 (795.04 KB)

Non-trainable params: 0 (0.00 B)

Let's hand-calculate the number of parameters to verify.

# each Dense layer has (inputs x units) weights plus one bias per unit
parameters = (784 * 256 + 256) + (256 * 10 + 10)
print(parameters)

203530
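The same arithmetic can be wrapped in a small helper that works for any stack of Dense layers. The helper names dense_params and mlp_params are hypothetical, introduced only for this sketch. The second call shows the count for a hypothetical deeper variant with an extra 512-unit hidden layer.

```python
def dense_params(n_in, n_out):
    # one weight per (input, unit) pair plus one bias per unit
    return n_in * n_out + n_out

def mlp_params(layer_sizes):
    # layer_sizes lists the width of each layer, starting with the flattened input
    return sum(dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:]))

print(mlp_params([784, 256, 10]))        # 203530, matching model.summary()
print(mlp_params([784, 512, 256, 10]))   # 535818 for the deeper variant
```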

Set Optimization Parameters

Now that the model is defined, we need to tell Keras three things:

- What loss function to use

- Which optimizer to use - we will again use Adam, a good default that usually works well without much tuning

- What metrics you want Keras to report - in classification problems like this one, accuracy is usually the metric you want to see.

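Since our output variable is categorical with 10 levels, we will use the sparse_categorical_crossentropy loss function. The "sparse" part means that the labels are stored as integers (0 to 9) rather than as one-hot vectors. With one-hot labels we would use categorical_crossentropy instead.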
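Under the hood, this loss is the negative log of the probability the model assigns to the correct class, averaged over the batch. The NumPy sketch below uses made-up probabilities for a batch of three images. It computes the loss once from integer labels and once from one-hot labels, and shows that the two agree.

```python
import numpy as np

# Made-up softmax outputs for a batch of 3 images over 10 classes
probs = np.full((3, 10), 0.02)
probs[0, 9] = 0.82   # image 0: confident in class 9 (Ankle boot)
probs[1, 0] = 0.82   # image 1: confident in class 0 (T-shirt/top)
probs[2, 3] = 0.82   # image 2: confident in class 3 (Dress)
y = np.array([9, 0, 2])   # integer ("sparse") labels; image 2 is misclassified

# Sparse categorical cross-entropy: -log(probability of the true class)
sparse_ce = -np.log(probs[np.arange(len(y)), y]).mean()

# Categorical cross-entropy with one-hot labels gives the same value
one_hot = np.eye(10)[y]
dense_ce = -(one_hot * np.log(probs)).sum(axis=1).mean()

print(sparse_ce, dense_ce)
```

The misclassified third image contributes -log(0.02), which is much larger than the -log(0.82) of the two correct ones. That is how the loss penalizes confident mistakes.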

# compile the model
model.compile(
    loss="sparse_categorical_crossentropy", optimizer="adam", metrics=["accuracy"]
)

Train the Model

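Before training, we need to pick two settings:

- The batch size, i.e., how many training examples are used for each weight update: 32 or 64 are common choices.

- The number of epochs, i.e., how many passes to make through the training data: start with 10-20.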
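The batch size determines how many weight updates happen per epoch: ceil(number of training examples / batch size). The short sketch below shows where the "938/938" in the training log comes from.

```python
import math

n_train = 60_000   # number of training images
steps_per_epoch = {}
for batch_size in (32, 64):
    # the last batch of an epoch may be smaller than batch_size
    steps_per_epoch[batch_size] = math.ceil(n_train / batch_size)

print(steps_per_epoch)   # {32: 1875, 64: 938}

# with batch_size=64, the final batch holds the leftover images
last_batch = n_train - (steps_per_epoch[64] - 1) * 64
print(last_batch)        # 32
```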

OK, let's train the model using the model.fit function!

# first try: a batch size of 64 and 10 epochs
batch_size = 64
epochs = 10
model.fit(x_train, y_train, batch_size=batch_size, epochs=epochs)

Epoch 1/10
938/938 ━━━━━━━━━━━━━━━━━━━━ 8s 7ms/step - accuracy: 0.7800 - loss: 0.6253
Epoch 2/10
938/938 ━━━━━━━━━━━━━━━━━━━━ 5s 6ms/step - accuracy: 0.8624 - loss: 0.3846
Epoch 3/10
938/938 ━━━━━━━━━━━━━━━━━━━━ 6s 7ms/step - accuracy: 0.8775 - loss: 0.3395
Epoch 4/10
938/938 ━━━━━━━━━━━━━━━━━━━━ 10s 7ms/step - accuracy: 0.8847 - loss: 0.3120
Epoch 5/10
938/938 ━━━━━━━━━━━━━━━━━━━━ 9s 6ms/step - accuracy: 0.8923 - loss: 0.2913
Epoch 6/10
938/938 ━━━━━━━━━━━━━━━━━━━━ 10s 6ms/step - accuracy: 0.8983 - loss: 0.2741
Epoch 7/10
938/938 ━━━━━━━━━━━━━━━━━━━━ 10s 6ms/step - accuracy: 0.9045 - loss: 0.2588
Epoch 8/10
938/938 ━━━━━━━━━━━━━━━━━━━━ 10s 6ms/step - accuracy: 0.9094 - loss: 0.2457
Epoch 9/10
938/938 ━━━━━━━━━━━━━━━━━━━━ 5s 6ms/step - accuracy: 0.9140 - loss: 0.2345
Epoch 10/10
938/938 ━━━━━━━━━━━━━━━━━━━━ 11s 6ms/step - accuracy: 0.9167 - loss: 0.2241


Evaluate the Model

You can see from the above that our model achieves over 91% accuracy on the training set. But, as we know, doing well on the training set isn't all that impressive, because the model may be overfitting. So the real question is: how well does it do on the test set?

model.evaluate is a very handy function for calculating the performance of your model on any dataset. It returns the loss followed by the metrics specified in compile (here, accuracy).

# evaluate the model on the test set
model.evaluate(x_test, y_test)

313/313 ━━━━━━━━━━━━━━━━━━━━ 1s 3ms/step - accuracy: 0.8790 - loss: 0.3467

[0.3425934910774231, 0.8804000020027161]
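The accuracy that model.evaluate reports is the fraction of images whose highest-probability class matches the true label. The NumPy sketch below uses a hypothetical helper, accuracy_from_probs, on made-up outputs with 3 classes instead of 10. With the trained model, you could pass it model.predict(x_test) and y_test.

```python
import numpy as np

def accuracy_from_probs(probs, y_true):
    # predicted class = index of the largest probability in each row
    y_pred = np.argmax(probs, axis=1)
    return np.mean(y_pred == y_true)

# Made-up outputs for 4 images over 3 classes (a toy stand-in for 10)
probs = np.array([
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
    [0.3, 0.3, 0.4],
    [0.5, 0.4, 0.1],
])
y_true = np.array([0, 1, 2, 1])
print(accuracy_from_probs(probs, y_true))   # 0.75: the last image is wrong
```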

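On the test set, the model reaches about 88% accuracy, a few points below its training accuracy. This suggests some overfitting.

Did the NN we created take advantage of the fact that the input data consists of images?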
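One way to see that a dense network ignores the image structure: after Flatten, a Dense layer treats the 784 pixels as an unordered list. Suppose you scramble the pixels of every image with the same fixed permutation and scramble the rows of the weight matrix the same way. The layer's output is then unchanged, so the model has no built-in notion of which pixels are neighbors. A NumPy check with random weights and a random stand-in image:

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.random(784)                 # one flattened image (random stand-in)
W = rng.normal(size=(784, 256))     # hidden-layer weights
b = np.zeros(256)

perm = rng.permutation(784)         # a fixed scrambling of pixel positions
out_original = np.maximum(x @ W + b, 0)
out_scrambled = np.maximum(x[perm] @ W[perm] + b, 0)

print(np.allclose(out_original, out_scrambled))   # True
```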

A Convolutional Neural Network

Convolutional Layers:

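Convolutional (typically abbreviated to "conv") layers were a key breakthrough behind many of the exciting advances in AI for computer vision problems such as image classification and image recognition. They were designed specifically to work with images. Instead of connecting every pixel to every neuron, a conv layer slides a small set of learned filters across the image, so it can detect local patterns such as edges and textures wherever they appear.

Conv layers are a core ingredient of many everyday vision applications, such as recognizing faces in photos!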
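To make "sliding a filter" concrete, here is a NumPy sketch of what a single 2x2 filter computes, followed by 2x2 max pooling. The test image, the hand-picked filter values, and the helper names conv2d_valid and max_pool_2x2 are all illustrative assumptions. A real Conv2D layer learns its filters (plus biases) during training. Note the sizes: a 28x28 image shrinks to 27x27 after a 2x2 convolution and to 13x13 after pooling.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide kernel over image (no padding, stride 1), taking dot products.
    Like Keras Conv2D, this is technically cross-correlation (no kernel flip)."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool_2x2(fmap):
    """2x2 max pooling with stride 2; an odd trailing row/column is dropped."""
    h, w = fmap.shape[0] // 2 * 2, fmap.shape[1] // 2 * 2
    f = fmap[:h, :w]
    return f.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

# A test image with a vertical edge: dark left half, bright right half
image = np.zeros((28, 28))
image[:, 14:] = 1.0

# A hand-picked 2x2 filter that responds to dark-to-bright vertical edges
kernel = np.array([[-1.0, 1.0],
                   [-1.0, 1.0]])

fmap = np.maximum(conv2d_valid(image, kernel), 0)   # convolution + ReLU
pooled = max_pool_2x2(fmap)

print(fmap.shape, pooled.shape)             # (27, 27) (13, 13)
print(np.argwhere(fmap[0] > 0).ravel())     # [13]: the filter fires only at the edge
```

The same small filter is reused at every position. This weight sharing is why conv layers need far fewer parameters than a Dense layer over all 784 pixels.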

We will follow the same sequence of steps as we did above:

- Data Prep

- Define Model

- Set Optimization Parameters

- Train Model

- Evaluate Model

Data Prep

The data has already been normalized so that the numbers are between 0 and 1. We don't need to do it again.

x_train.shape

(60000, 28, 28)

Conv layers expect each image to have a third "channels" dimension: color images have 3 channels (red, green and blue), while our grayscale images have just 1. So we need to add another dimension to each example, so that it goes from 28x28 to 28x28x1.

# add a channel dimension to x_train and x_test: (N, 28, 28) -> (N, 28, 28, 1)
x_train = np.expand_dims(x_train, -1)
x_test = np.expand_dims(x_test, -1)
x_train.shape

(60000, 28, 28, 1)
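np.expand_dims is one of several equivalent ways to add the channel axis. The sketch below uses a small zero array as a stand-in for x_train and checks that indexing with np.newaxis and reshape produce the same result.

```python
import numpy as np

batch = np.zeros((5, 28, 28))                  # stand-in for x_train

a = np.expand_dims(batch, -1)                  # what the notebook does
b = batch[..., np.newaxis]                     # same result via indexing
c = batch.reshape(batch.shape + (1,))          # same result via reshape

print(a.shape, b.shape, c.shape)   # (5, 28, 28, 1) (5, 28, 28, 1) (5, 28, 28, 1)

# A batch of RGB images would have 3 channels in the last axis instead
rgb_batch = np.zeros((5, 28, 28, 3))
print(rgb_batch.shape[-1])         # 3
```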

Define Model

OK, we are ready to create our very first Convolutional Neural Network (CNN)!

inputs = keras.Input(shape=x_train.shape[1:])
# first convolutional block: convolutional layer + pooling layer
x = keras.layers.Conv2D(32, kernel_size=(2, 2), activation="relu", name="Conv_1")(inputs)
x = keras.layers.MaxPool2D()(x)
# second convolutional block: convolutional layer + pooling layer
x = keras.layers.Conv2D(32, kernel_size=(2, 2), activation="relu", name="Conv_2")(x)
x = keras.layers.MaxPool2D()(x)
# flatten the 6 x 6 x 32 feature maps into a vector of 1,152 numbers
x = keras.layers.Flatten()(x)
x = keras.layers.Dense(256, activation="relu")(x)
# output layer: 10 class probabilities
output = keras.layers.Dense(10, activation="softmax")(x)
model = keras.Model(inputs, output)
model.summary()

Model: "functional_1"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| input_layer_1 (InputLayer) | (None, 28, 28, 1) | 0 |
| Conv_1 (Conv2D) | (None, 27, 27, 32) | 160 |
| max_pooling2d (MaxPooling2D) | (None, 13, 13, 32) | 0 |
| Conv_2 (Conv2D) | (None, 12, 12, 32) | 4,128 |
| max_pooling2d_1 (MaxPooling2D) | (None, 6, 6, 32) | 0 |
| flatten_1 (Flatten) | (None, 1152) | 0 |
| dense (Dense) | (None, 256) | 295,168 |
| dense_1 (Dense) | (None, 10) | 2,570 |

Total params: 302,026 (1.15 MB)

Trainable params: 302,026 (1.15 MB)

Non-trainable params: 0 (0.00 B)
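As with the first model, we can hand-check these numbers. The sketch below assumes the Keras defaults used above ("valid" padding, stride 1 for the convolutions, and 2x2 pooling with stride 2). The helper names are hypothetical.

```python
def conv_out(size, kernel):        # 'valid' padding, stride 1
    return size - kernel + 1

def pool_out(size, pool=2):        # 'valid' padding, stride = pool size
    return size // pool

def conv_params(kh, kw, c_in, filters):
    # each filter has kh * kw * c_in weights plus one bias
    return (kh * kw * c_in + 1) * filters

def dense_params(n_in, n_out):
    return n_in * n_out + n_out

s = 28
s = pool_out(conv_out(s, 2))       # Conv_1 -> 27, pooling -> 13
s = pool_out(conv_out(s, 2))       # Conv_2 -> 12, pooling -> 6
flat = s * s * 32                  # 6 * 6 * 32 = 1152

params = [
    conv_params(2, 2, 1, 32),      # Conv_1: 160
    conv_params(2, 2, 32, 32),     # Conv_2: 4,128
    dense_params(flat, 256),       # dense: 295,168
    dense_params(256, 10),         # dense_1: 2,570
]
print(flat, params, sum(params))   # 1152 [160, 4128, 295168, 2570] 302026
```

Only 4,288 of the 302,026 parameters belong to the two conv layers. Almost all the rest sit in the Dense layer after Flatten, because the conv filters are shared across every image position.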

Set Optimization Parameters

# compile the model using sparse_categorical_crossentropy,
# the adam optimizer, and accuracy as a metric
model.compile(
    loss="sparse_categorical_crossentropy", optimizer="adam", metrics=["accuracy"]
)

Train the Model

DISCLAIMER: This will take some time to complete (roughly 40 seconds per epoch in the run below).

# train the model with either 32 or 64 as the batch size, for 10 epochs
model.fit(x_train, y_train, batch_size=64, epochs=10)

Epoch 1/10
938/938 ━━━━━━━━━━━━━━━━━━━━ 39s 40ms/step - accuracy: 0.7675 - loss: 0.6546
Epoch 2/10
938/938 ━━━━━━━━━━━━━━━━━━━━ 41s 40ms/step - accuracy: 0.8804 - loss: 0.3286
Epoch 3/10
938/938 ━━━━━━━━━━━━━━━━━━━━ 41s 41ms/step - accuracy: 0.8973 - loss: 0.2795
Epoch 4/10
938/938 ━━━━━━━━━━━━━━━━━━━━ 39s 39ms/step - accuracy: 0.9090 - loss: 0.2489
Epoch 5/10
938/938 ━━━━━━━━━━━━━━━━━━━━ 40s 37ms/step - accuracy: 0.9168 - loss: 0.2249
Epoch 6/10
938/938 ━━━━━━━━━━━━━━━━━━━━ 36s 39ms/step - accuracy: 0.9252 - loss: 0.2046
Epoch 7/10
938/938 ━━━━━━━━━━━━━━━━━━━━ 36s 39ms/step - accuracy: 0.9318 - loss: 0.1847
Epoch 8/10
938/938 ━━━━━━━━━━━━━━━━━━━━ 37s 39ms/step - accuracy: 0.9387 - loss: 0.1667
Epoch 9/10
938/938 ━━━━━━━━━━━━━━━━━━━━ 37s 40ms/step - accuracy: 0.9453 - loss: 0.1496
Epoch 10/10
938/938 ━━━━━━━━━━━━━━━━━━━━ 40s 39ms/step - accuracy: 0.9510 - loss: 0.1346


Evaluate the Model

# evaluate the model on the test set; score is [loss, accuracy]
score = model.evaluate(x_test, y_test)
print("Test accuracy:", score[1])

313/313 ━━━━━━━━━━━━━━━━━━━━ 3s 7ms/step - accuracy: 0.9022 - loss: 0.3132
Test accuracy: 0.9072999954223633

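The CNN reaches about 90.7% accuracy on the test set, up from about 88.0% for our first, fully connected model. How does that compare with the state of the art (SOTA) on Fashion MNIST?

At the time this notebook was written, the best reported accuracy was 96.91%!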

Challenge: Can you get to SOTA by playing around with the architecture of the network?

Conclusion

We have built a Deep Learning model that can classify grayscale images of clothing items with over 90% accuracy on the test set!